Contents

High level architecture of geographic map delivery system

Server-side architecture, Azure cloud deployment case

Server-side, ASP.NET Core middleware pipeline on Azure cloud VM Scale Set.

NodeController, simple alternative to Kubernetes, Redis, Micro Services, Nano Services, etc.

NodeController resource balancing

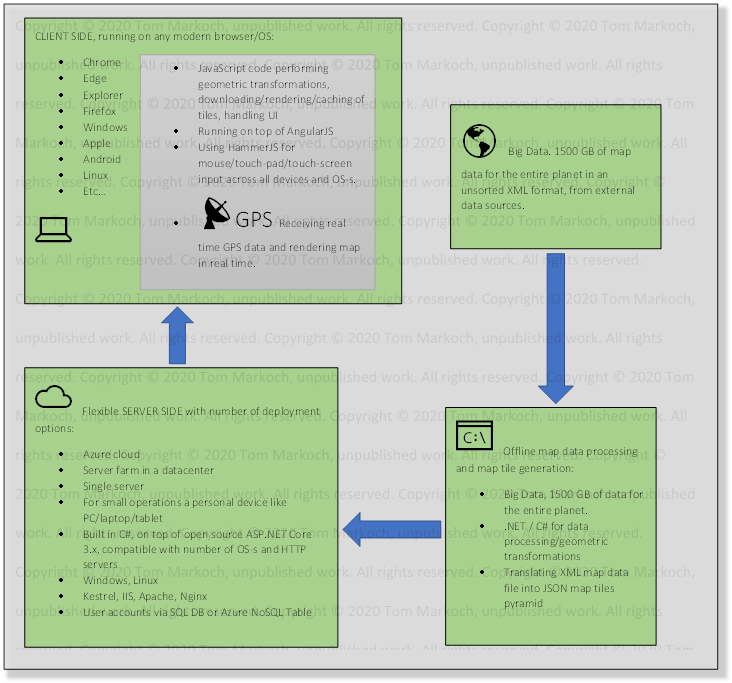

High level architecture of geographic map delivery system

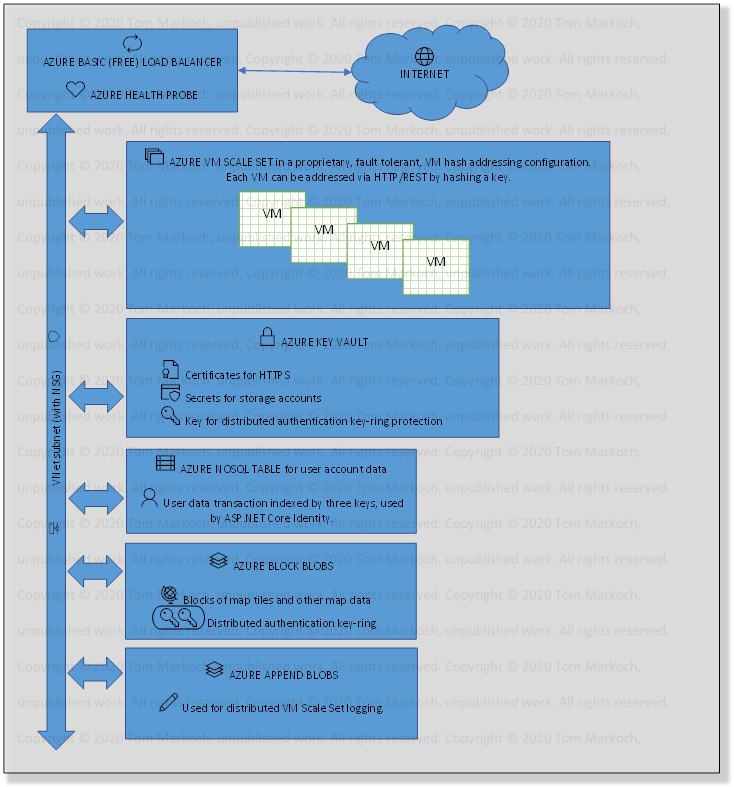

Server-side architecture, Azure cloud deployment case

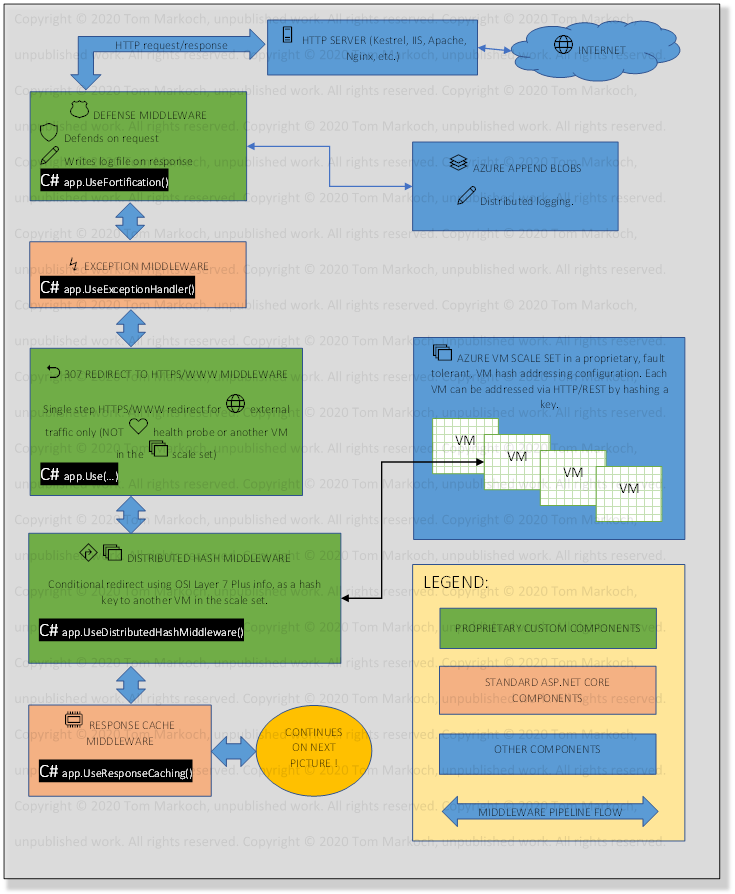

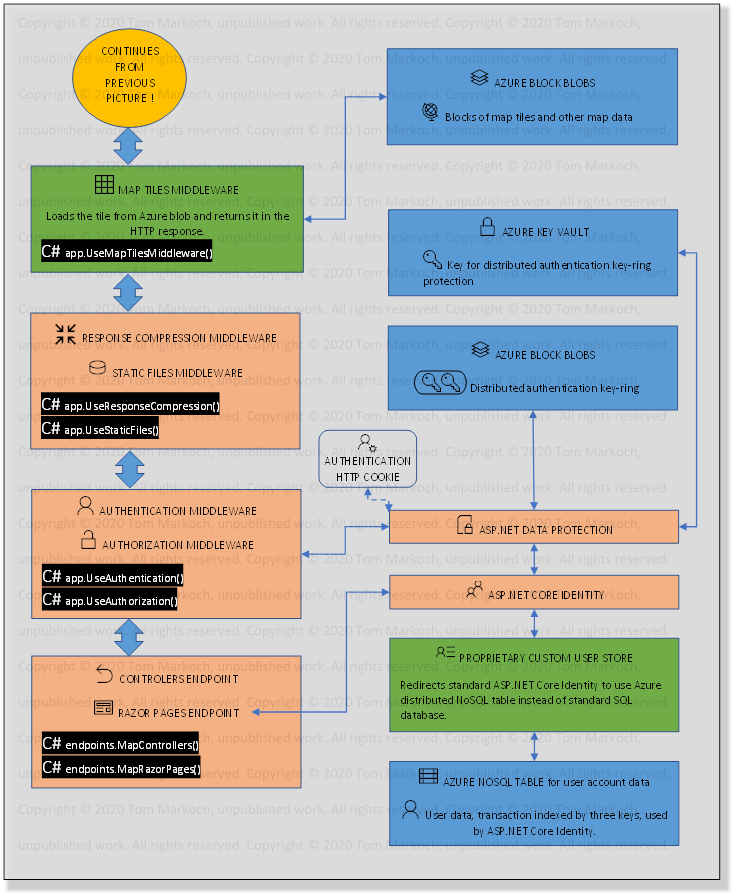

Server-side, ASP.NET Core middleware pipeline on Azure cloud VM Scale Set.

The map server is built on top of open source ASP.NET Core. It is a flexible solution capable of running on all major operating systems and HTTP servers. It can run in a cloud such as Azure, on a server farm, on an individual server, or even on a personal device.

ASP.NET Core middleware pipeline configured to run on an Azure cloud VM Scale Set. Only the most important components are shown.

NodeController, simple alternative to Kubernetes, Redis, Micro Services, Nano Services, etc.

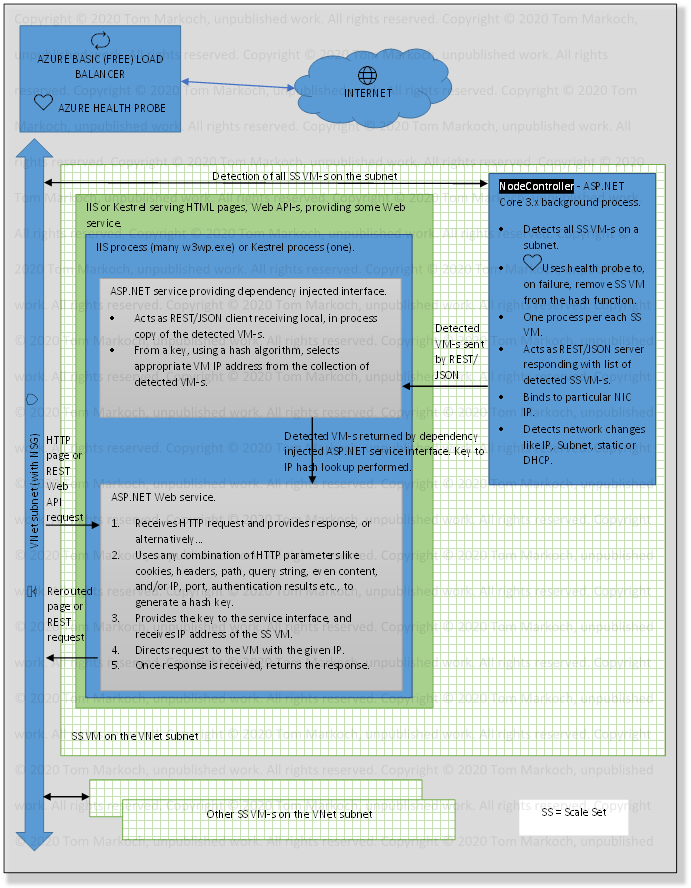

NodeController is a system that distributes software objects across a number of computing nodes on VM-s. A software object can be anything that consumes computer resources such as memory or CPU time. The only requirement is that each software object has a small footprint (for example, memory or CPU requirements) compared to the total footprint of all software objects added together. Key characteristics of the NodeController system:

- Fully automatic and well-balanced distribution of CPU, memory and other resource utilization across all nodes or VM-s.

- All nodes or VM-s are equal. There is no single point of failure that could bring the whole system down.

- Extremely flexible. Uses a hash function to distribute software objects across nodes or VM-s. Any information available at OSI layer 7 (all IP, HTTP information, etc.) can be used as a hash key. Even encrypted user or user group authentication info can be used as a hash key. In addition, background processes can be distributed by their hashed ID-s.

- Automatically detects the network configuration of nodes or VM-s and generates the key-to-IP hash function. Automatically updates the hash function when nodes or VM-s are added or removed, or when the network changes (IP change, subnet change), for both static and DHCP configurations.

- Fault tolerant. Uses health probes in the same way a typical cloud load balancer does, and removes a failed node or VM from the hash function.

- Very simple installation. It comes down to copying over a few files and setting the OS to start the executable on system startup.

- High performance. Can easily handle loads of tens of thousands of requests per second, per node or VM. Uses under 1% CPU to detect all the nodes on the subnet and maintain the key-to-IP hash function.

- Extremely easy integration into ASP.NET Core projects. First, the NodeController ASP.NET service is added to the project; it includes an interface containing the hash function that maps a key to the node or VM IP address. Then the hash function interface is simply injected where needed, using standard ASP.NET interface injection methods.
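The key-to-IP mapping described in the list above can be illustrated with a small sketch. The source does not say which hashing scheme NodeController uses, so this example assumes rendezvous (highest-random-weight) hashing; the class name `KeyToNodeMap`, its methods, and the sample IP addresses are hypothetical and are not part of the NodeController API.

```python
import hashlib


class KeyToNodeMap:
    """Hypothetical key-to-IP map (illustration only, not the NodeController API)."""

    def __init__(self, node_ips):
        self._nodes = set(node_ips)

    def add_node(self, ip):
        # Called when a new node or VM is detected on the subnet.
        self._nodes.add(ip)

    def remove_node(self, ip):
        # Called, for example, after a node fails its health probe.
        self._nodes.discard(ip)

    @staticmethod
    def _score(key, ip):
        # Deterministic 64-bit score for the (node, key) pair.
        digest = hashlib.sha256(f"{ip}|{key}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def node_for(self, key):
        # Rendezvous hashing: the node with the highest score owns the key.
        if not self._nodes:
            raise RuntimeError("no healthy nodes available")
        return max(self._nodes, key=lambda ip: (self._score(key, ip), ip))


if __name__ == "__main__":
    nodes = KeyToNodeMap(["10.0.0.4", "10.0.0.5", "10.0.0.6"])
    print(nodes.node_for("user-42"))
    nodes.remove_node("10.0.0.5")  # simulate a failed health probe
    print(nodes.node_for("user-42"))
```

With this particular scheme, removing a node only remaps the keys that were owned by that node; every other key keeps its node. Whether NodeController has the same property is not stated in the source.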

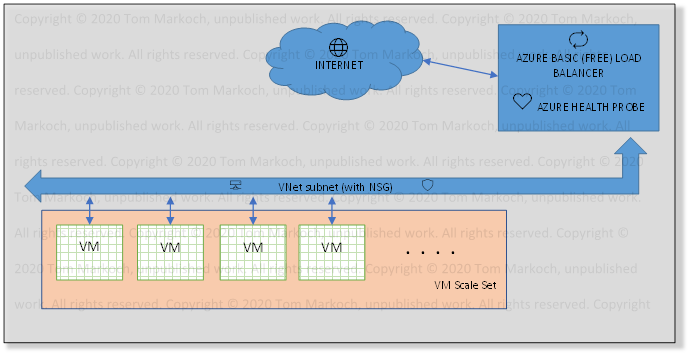

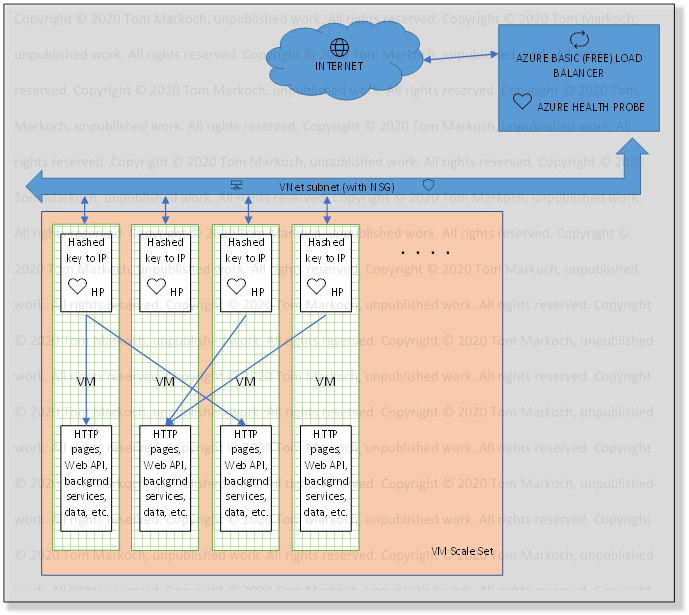

To summarize, in the Azure deployment case for example, the system transforms regular Scale Set VM-s:

…into hash key addressable Scale Set VM-s:

Detailed description of the system operation on a node or VM (for simplicity, background processes that can also be controlled by the NodeController system are not shown). In this example, NodeController is used on Scale Set VM-s:

NodeController resource balancing

NodeController can automatically balance resources, such as memory or CPU, used by a large number of software objects distributed across a number of nodes or VM-s. How does NodeController accomplish this automatically? Let $X_m$ (random variable m) be the amount of computer resources (memory, CPU, etc.) used by software object m. If we allocate n software objects to a node or VM, each using a random amount of computer resources, what is the total amount of resources $S_n$ used on that node or VM?

$$S_n = \sum_{m=1}^{n} X_m$$

Let $f(x)$ be the probability density function of random variable $X_m$, with the $X_m$ taken to be independent and identically distributed. Then the mean and variance of $X_m$ are defined as:

$$\mu = \int_{-\infty}^{\infty} x \, f(x) \, dx$$

$$\sigma^2 = \int_{-\infty}^{\infty} (x - \mu)^2 f(x) \, dx$$

Also, let the normalized $S_n$ be defined as:

$$Z_n = \frac{S_n - n\mu}{\sigma \sqrt{n}}$$

If $n \to \infty$ then, according to the Central Limit Theorem, the probability distribution of the normalized $S_n$ converges to the normal distribution $N(0, 1)$:

$$f_{Z_n}(z) \to \frac{1}{\sqrt{2\pi}} \, e^{-z^2 / 2}$$

As we can see, by the mathematical nature of the problem, resource utilization (memory, CPU, etc.) becomes very predictable for large n, and well balanced across multiple nodes or VM-s.
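To make "very predictable" concrete, the relative spread of the total can be worked out directly. This is a sketch under the assumption that the per-object resource amounts are independent with a common mean $\mu$ and standard deviation $\sigma$; $S_n$ denotes the total used by n objects on one node, and the symbols are notation chosen here for illustration.

```latex
\mathbb{E}[S_n] = n\mu, \qquad
\operatorname{Var}[S_n] = n\sigma^2, \qquad
\frac{\sqrt{\operatorname{Var}[S_n]}}{\mathbb{E}[S_n]} = \frac{\sigma}{\mu\sqrt{n}}
```

For instance, if $\sigma = \mu$ and n = 10,000, the relative spread is $1/\sqrt{10000} = 1\%$. The typical deviation of a node's total from its expected value shrinks in proportion to $1/\sqrt{n}$.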

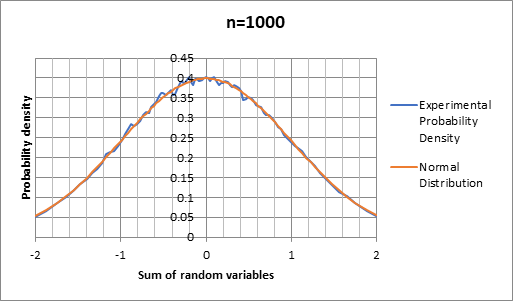

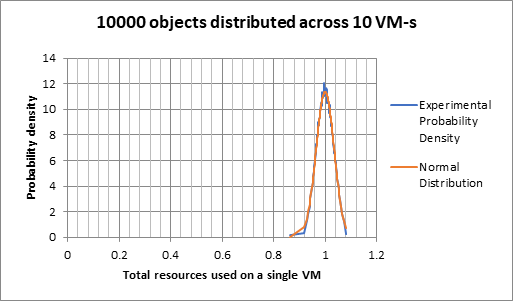

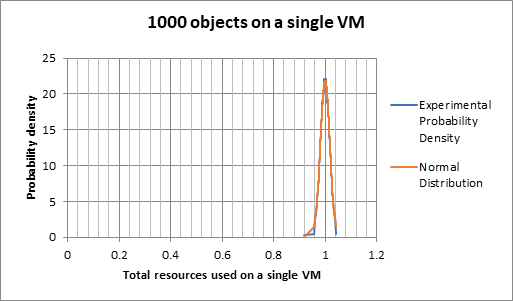

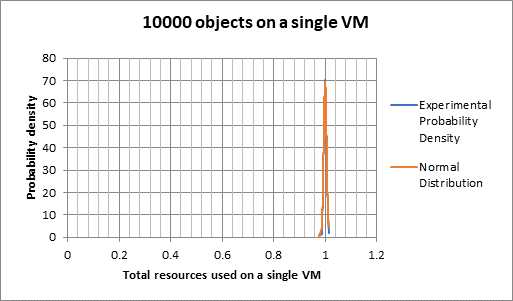

As an experiment, let's calculate the normalized probability density function of the total resource usage $S_n$ (the sum of the per-object usages $X_m$) for n = 1000, and see how it converges to the standard normal distribution $N(0, 1)$. We'll use random variables $X_m$ with a uniform distribution. As we can see, for n = 1000 the distribution of the normalized $S_n$ already matches the expected normal distribution very well.
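An experiment of this kind can be reproduced with a short simulation. The sketch below is not the author's code: it assumes the uniform distribution is on the interval [0, 1) (the source does not preserve the interval), and the function name `normalized_sums`, the trial count and the seed are arbitrary choices made for illustration.

```python
import math
import random
import statistics


def normalized_sums(n, trials, seed=0):
    """Return `trials` samples of (S_n - n*mu) / (sigma*sqrt(n)) for U(0, 1) terms."""
    rng = random.Random(seed)
    mu = 0.5                        # mean of U(0, 1)
    sigma = math.sqrt(1.0 / 12.0)   # standard deviation of U(0, 1)
    samples = []
    for _ in range(trials):
        s_n = sum(rng.random() for _ in range(n))
        samples.append((s_n - n * mu) / (sigma * math.sqrt(n)))
    return samples


z = normalized_sums(n=1000, trials=2000)
within_one = sum(1 for v in z if abs(v) <= 1.0) / len(z)

# For a standard normal distribution we expect a mean near 0, a standard
# deviation near 1, and roughly 68% of samples within one standard deviation.
print("mean:", statistics.fmean(z))
print("std dev:", statistics.pstdev(z))
print("fraction within +/-1:", within_one)
```

A histogram of `z` can then be compared against the standard normal density.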

Let’s perform a few more experiments, but this time let’s normalize ![]() by dividing by ![]() .

In the above examples we can see how precisely resource utilization can be predicted, depending on the number of objects distributed across the VM set and the number of VM-s in the set.
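The dependence on the number of objects and the number of VM-s can also be explored with a small simulation. This is a minimal sketch under stated assumptions, not the author's experiment: objects are assigned to VM-s by a SHA-256 hash of their ID modulo the VM count, each object's resource usage is drawn from U(0, 1), and the names `per_vm_load` and `relative_spread` are hypothetical.

```python
import hashlib
import random
import statistics


def per_vm_load(num_objects, num_vms, seed=0):
    """Distribute objects across VMs by hashed ID and return total usage per VM."""
    rng = random.Random(seed)
    totals = [0.0] * num_vms
    for obj_id in range(num_objects):
        digest = hashlib.sha256(str(obj_id).encode("utf-8")).digest()
        vm = int.from_bytes(digest[:8], "big") % num_vms
        totals[vm] += rng.random()  # assumed per-object usage, U(0, 1)
    return totals


def relative_spread(totals):
    """Standard deviation of per-VM totals divided by their mean."""
    return statistics.pstdev(totals) / statistics.fmean(totals)


for num_objects in (1_000, 10_000, 100_000):
    totals = per_vm_load(num_objects, num_vms=10)
    print(num_objects, "objects on 10 VMs, relative spread:",
          round(relative_spread(totals), 4))
```

In this sketch the relative spread between VM-s shrinks as the number of objects per VM grows, which is the behavior the Central Limit Theorem argument predicts.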

Copyright © 2018-2021 Tom Markoch, unpublished work. All rights reserved.

Edge, Explorer, Chrome, Firefox, Windows, Apple, Android, Linux, AngularJS, HammerJS, OSM, OpenStreetMap, Azure, .NET, ASP.NET, .NET Core, ASP.NET Core, Razor, Kestrel, IIS, Apache, Nginx, Kubernetes, Redis and other trademarks, registered trademarks, service marks and company names cited herein are the property of their respective owners.